This article explains how AI and automation reduce human risk in SOC operations and drive measurable improvements. You’ll see what problems they solve, which human risks matter most, and where AI fits in the SOC stack. We cover cutting alert fatigue, accelerating detection and investigation, and automating safe response. You’ll learn how identity, email, cloud, and supply-chain controls benefit, how CTI is operationalized, and how to measure ROI. Governance, model risk, explainability, human-in-the-loop design, build-vs-buy, validation paths, security of the AI itself, maturity stages, and near-term trends round it out. Eventus leverages AI and automation to reduce human risk in SOC operations, ensuring smarter, faster, and more reliable cybersecurity defense.

What problem are we solving in SOC operations with AI and automation?

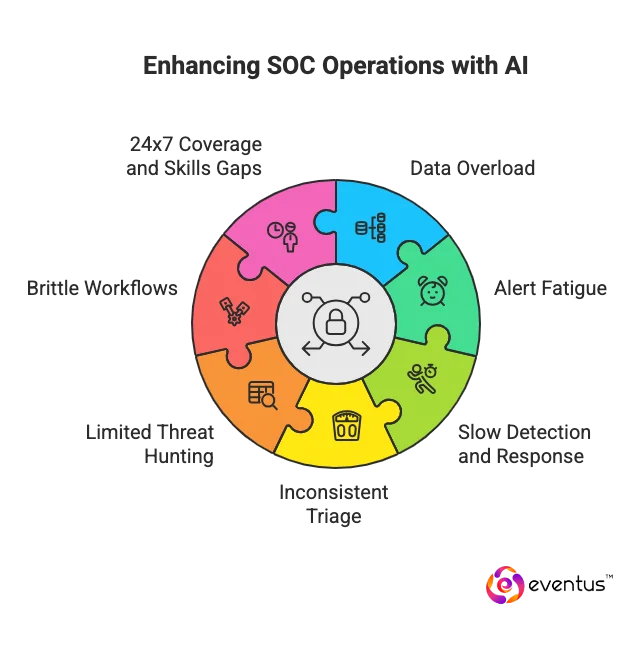

In a managed security service provider's SOC, AI and automation solve the core problem of human-limited scale and speed in the face of exploding security data, diverse attack surfaces, and fragmented tools. Concretely, they address:

- Data overload from SIEM/EDR/cloud logs by using AI models and machine learning to normalize, correlate, and prioritize security data for SOC teams.

- Alert fatigue and false positives through AI-driven triage, deduplication, and risk scoring that significantly reduce non-actionable security alerts.

- Slow detection and response time by automating enrichment and playbooks so threat detection and response move from minutes/hours to near-real-time.

- Inconsistent triage by standardizing decisions across human analysts with automation plus human oversight and human judgment at defined thresholds.

- Limited threat hunting capacity by surfacing anomalies and linking threat intelligence to probable use cases for proactive security.

- Brittle, manual workflows by applying security orchestration to automate handoffs across security tools and reduce manual human intervention.

- 24×7 coverage and skills gaps by giving SOC analysts AI-driven copilots and an AI-driven SOC backbone that scales continuous operations and improves overall security posture.

Which human risks (fatigue, bias, delay, skill gaps) are most impactful today?

- Fatigue from nonstop alerts: Continuous paging and high alert volume in a traditional SOC degrade attention and decision quality, raising MTTD/MTTR and P0 escapes. SOC automation and AI-driven security are adopted primarily to reduce alert fatigue among security analysts facing complex security workloads and evolving security threats.

- Cognitive bias in triage and investigation: Recency, confirmation, and availability bias lead security teams to over- or under-weight certain indicators, causing missed security incidents and noisy escalations. A managed security services SOC that enhances correlation can counter skewed human priors, but it still requires oversight.

- Operational delays under peak load: Queues form during bursty cyber threats, handoffs stall across tools, and manual enrichment slows containment. In a traditional security environment, these delays amplify blast radius; AI capabilities that automate enrichment and routing reduce clock time to isolate hosts or identities.

- Skill gaps across shifts and tiers: Variability in experience leads to inconsistent triage quality and playbook execution. This gap is most visible on nights/weekends. SOC automation codifies best practice so Tier-1 decisions match senior expectations, improving uniformity across security teams.

- Tool fragmentation and swivel-chair risk: Moving between consoles increases copy-paste errors and lost context. In a traditional SOC, this directly drives investigation mistakes; AI-driven security that stitches entities and timelines lowers error rates and rework.

- Procedural drift and runbook noncompliance: Under pressure, analysts skip steps or improvise. That drift leads to incomplete evidence and weak containment. Automation puts guardrails on execution so critical steps occur in order.

- Escalation bias and over-escalation: To stay safe, junior analysts escalate too much, flooding responders and delaying true priority work. AI-assisted scoring and narrative summaries help right-size escalations without suppressing critical cases.

- Knowledge silos and onboarding lag: Tribal knowledge locked in senior staff slows new-hire ramp and incident handoffs. AI SOC assistants surface context and prior cases inline, reducing reliance on scarce experts.

Where does AI fit in a modern SOC reference architecture?

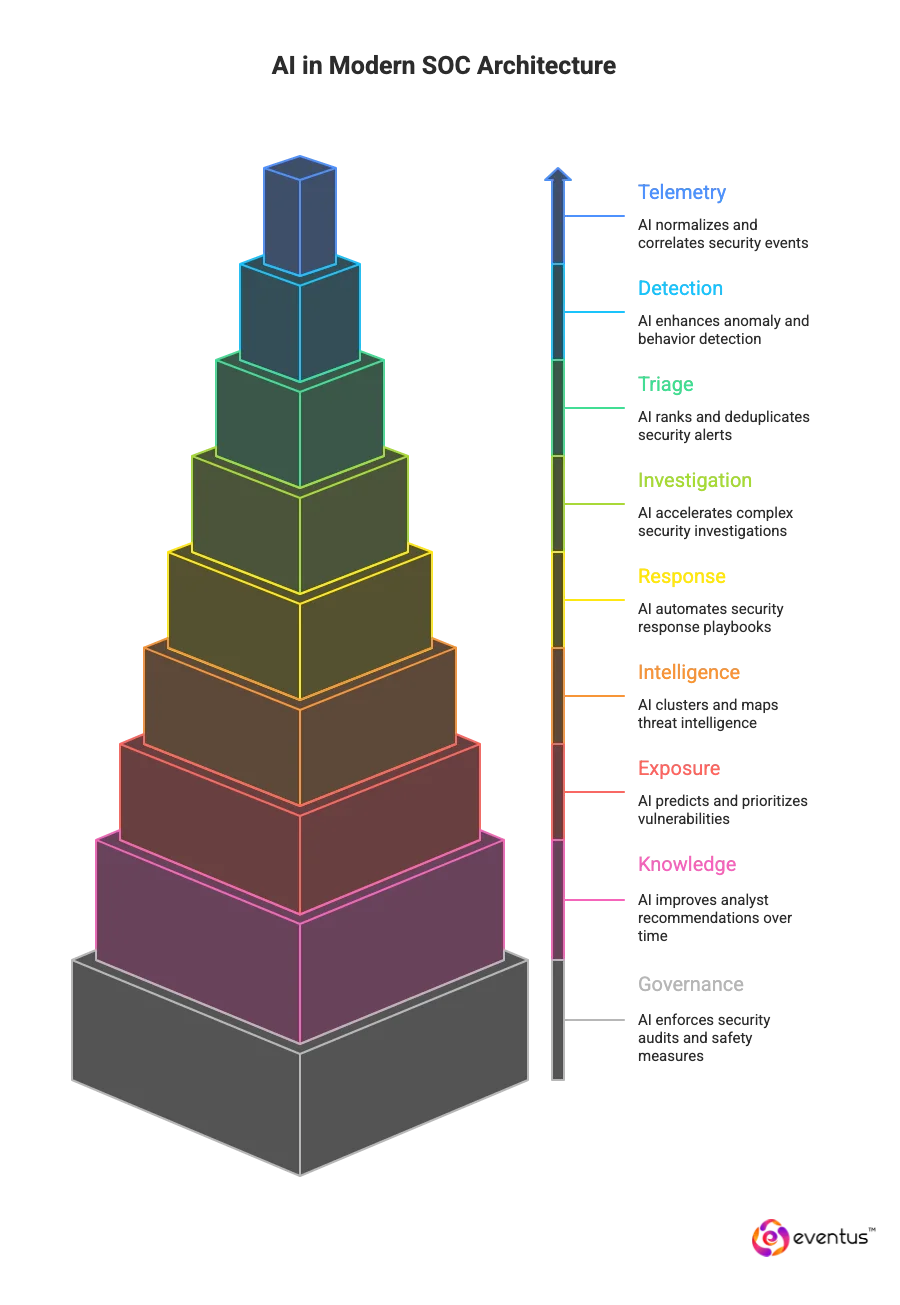

- Telemetry layer: Use AI and machine learning on security events to normalize, enrich, and correlate at ingest so AI-driven analytics surface real security threats and suppress noise.

- Detection: Apply advanced AI to anomaly and behavior models to lift true positives beyond traditional security operations.

- Triage: Rank and deduplicate alerts with AI-driven security operations to cut queues and variance; SOC automation scales prioritization.

- Investigation copilots: Use generative AI to stitch timelines and test hypotheses so complex investigations finish faster and consistently.

- Response orchestration: Automate playbooks (isolate host, reset credentials, block IOCs) with approvals and rollback; keep human oversight for high-impact steps.

- Threat intelligence: Cluster and map TTPs with AI systems so detections stay aligned to active campaigns.

- Exposure management: Use advanced AI models to predict vulnerabilities and preemptively strengthen security through prioritized fixes.

- Knowledge management: Capture analyst decisions so an AI-powered managed security service provider solution improves its recommendations shift over shift.

- Governance and safety: Enforce audit trails, confidence scores, kill switches; avoid a false sense of security and overreliance on AI.

- Placement and scale: Integrate AI in SIEM, SOAR, EDR/XDR, CTI, and ITSM APIs to enable security teams to leverage AI without rewrites; automation can handle repetitive tasks.
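The response-orchestration and governance bullets above can be sketched as a small playbook runner. This is a minimal illustration under stated assumptions, not a real SOAR API: `Step`, `run_playbook`, and the `approve` callback are hypothetical names, and the actions here only mutate local state instead of calling security tools.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    action: Callable[[], None]    # performs the change
    rollback: Callable[[], None]  # undoes the change
    high_impact: bool = False     # needs human approval when True


def run_playbook(steps: List[Step],
                 approve: Callable[[Step], bool],
                 audit: List[str]) -> bool:
    """Run steps in order and record every outcome in the audit trail.

    A high-impact step that is not approved is skipped. If any step
    raises, all previously completed steps are rolled back in reverse.
    """
    done: List[Step] = []
    for step in steps:
        if step.high_impact and not approve(step):
            audit.append(f"skipped:{step.name}")
            continue
        try:
            step.action()
        except Exception as exc:
            audit.append(f"failed:{step.name}:{exc}")
            for prev in reversed(done):
                prev.rollback()
                audit.append(f"rolled_back:{prev.name}")
            return False
        audit.append(f"ok:{step.name}")
        done.append(step)
    return True
```

In this sketch the approval callback is where a human analyst (or a ticketing integration) would be consulted. Skipping a denied step rather than aborting the whole playbook is one possible design choice; a stricter policy could halt instead.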

How does AI reduce alert fatigue without missing real threats?

- Correlate and deduplicate at ingest: AI-driven analytics enable SOCs to cluster duplicate security events, merge artifacts by entity (user/host/identity), and suppress redundant notifications—cutting noise while preserving the highest-fidelity case.

- Risk scoring with business context: AI in cybersecurity ranks alerts by asset criticality, blast radius, and kill-chain stage so analysts see real security threats first; automation helps route only high-risk queues.

- Adaptive thresholds, not static rules: Models learn baseline behavior per user/app and auto-tune thresholds to the environment; this reduces false positives without hiding rare but dangerous anomalies.

- Intent- and sequence-aware detection: Sequence models evaluate event order (e.g., MFA push abuse → token misuse → data access) to keep chains that matter and drop isolated, low-signal churn.

- Human-in-the-loop feedback loops: An AI SOC analyst marks outcomes (true/false); the system retrains to reflect ground truth. This tight loop trims noise while maintaining recall on evolving tactics.

- Safeguarded suppression: Suppression rules include decay timers, confidence bounds, and “never suppress” tags for crown-jewel assets; the AI is designed with kill switches and audit trails to avoid blind spots.

- Shadow-mode validation before auto-action: AI-driven SOC automation runs in parallel to measure precision/recall, then graduates to action only when it meets agreed SLOs—preventing missed incidents during rollout.

- Playbook triage with explainability: Advanced SOC automation attaches evidence (signals, paths, and scores) so security analysts can quickly accept/override decisions, keeping control during complex investigations and strategic security reviews.

- Exposure signals feed detection: When AI models can predict vulnerabilities on key assets, detections for those assets receive higher priority, turning the SOC from reactive to targeted.

- Scale by design: SOC automation refers to deterministic playbooks; AI enhances the SOC with learning systems, so automation enables security operations at scale and transforms SOC operations without sacrificing true-positive sensitivity.
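As a rough sketch of how deduplication, risk scoring, and safeguarded suppression from the list above might fit together, consider the following. The `Alert` fields, the multiplicative score, and the `suppress_below` threshold are all illustrative assumptions, not a description of any specific product's logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Alert:
    entity: str               # user, host, or identity
    rule: str                 # detection that fired
    severity: float           # 0.0-1.0, from the detector
    asset_criticality: float  # 0.0-1.0, assumed business weight
    crown_jewel: bool = False # "never suppress" tag


def deduplicate(alerts: List[Alert]) -> List[Tuple[Alert, int]]:
    """Merge alerts sharing (entity, rule); keep the most severe plus a count."""
    groups: Dict[Tuple[str, str], List[Alert]] = defaultdict(list)
    for alert in alerts:
        groups[(alert.entity, alert.rule)].append(alert)
    return [(max(g, key=lambda a: a.severity), len(g)) for g in groups.values()]


def risk_score(alert: Alert) -> float:
    """Toy score: detector severity weighted by asset criticality."""
    return round(alert.severity * alert.asset_criticality, 3)


def triage(alerts: List[Alert],
           suppress_below: float = 0.2) -> List[Tuple[float, Alert, int]]:
    """Return (score, alert, duplicate_count) sorted by descending score.

    Low-scoring alerts are suppressed unless tagged crown_jewel.
    """
    queue = []
    for alert, count in deduplicate(alerts):
        score = risk_score(alert)
        if score < suppress_below and not alert.crown_jewel:
            continue
        queue.append((score, alert, count))
    queue.sort(key=lambda item: item[0], reverse=True)
    return queue
```

A production system would add decay timers and confidence bounds to the suppression rule and log every suppressed alert for audit; those are omitted here to keep the sketch short.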

How can AI speed up detection and investigation?

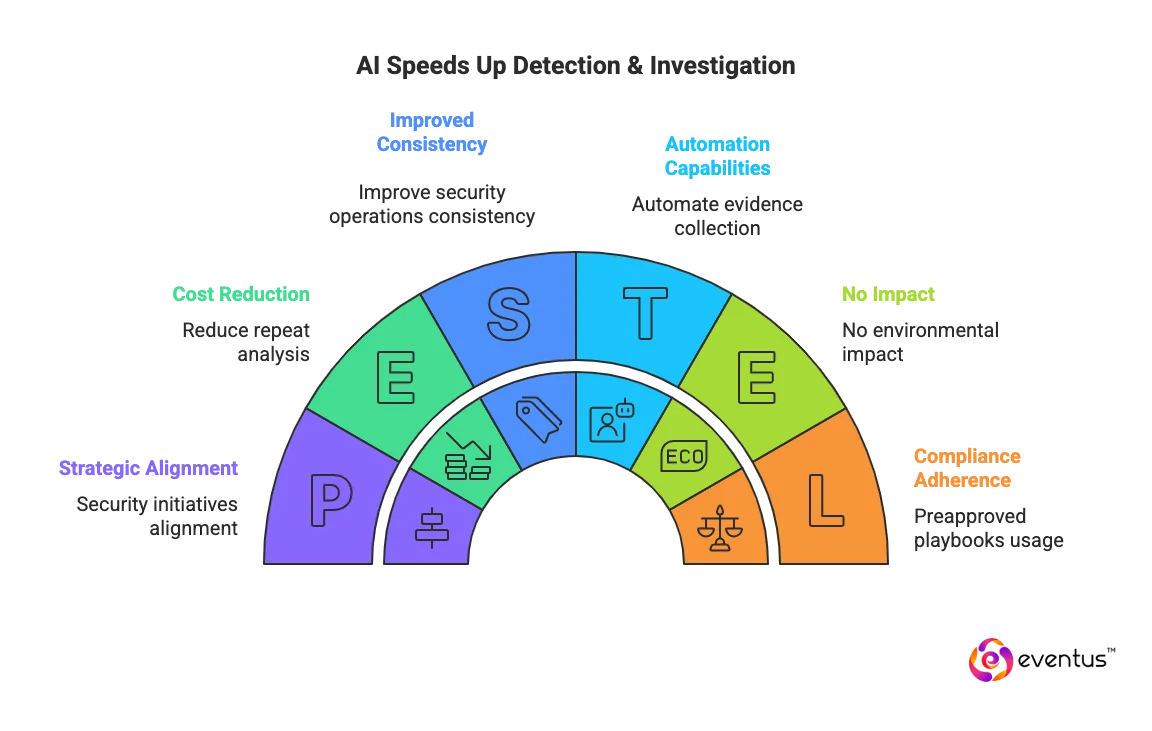

- Streamline ingest and correlation: AI is transforming cybersecurity operations by normalizing high-volume telemetry and performing entity resolution in real time, so related events collapse into a single case and MTTD drops.

- Behavior and sequence detection: Advanced models learn baselines per user/host/app and detect kill-chain sequences, surfacing true anomalies faster than rule-only systems for more effective security operations.

- Automated enrichment at first touch: SOC automation solutions pull threat intel, asset context, vulnerability data, and identity posture into the alert automatically, removing manual lookups from the security operations workflow.

- Graph-based timeline building: Automation capabilities assemble event graphs (login → privilege change → data access) instantly, which helps SOC analysts pivot fewer times to establish scope.

- LLM copilots for investigation: Copilots generate queries, summarize evidence, and propose next steps, accelerating triage while keeping analyst control and improving security operations consistency.

- Risk-based prioritization and routing: AI scores cases by blast radius and business criticality, auto-routing to the right queue, which shortens wait time to first action across common SOC use cases.

- Autonomous evidence collection: Automation uses preapproved playbooks to fetch artifacts (process trees, DNS records, email headers) and attach them to the case, compressing investigative cycles.

- Case summaries and knowledge reuse: Automation and advanced retrieval surface similar past incidents and proven fixes, reducing repeat analysis and aligning work with strategic security initiatives.
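The timeline-building and sequence-detection ideas above can be illustrated with a simple, rule-based stand-in. This sketch assumes events arrive as `(entity, epoch_seconds, event_type)` tuples and checks for an ordered chain such as login → privilege change → data access inside a time window; real sequence models are learned rather than hard-coded, and the function names here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

Event = Tuple[str, float, str]  # (entity, epoch_seconds, event_type)


def build_timelines(events: Iterable[Event]) -> Dict[str, List[Tuple[float, str]]]:
    """Group events per entity, ordered by timestamp."""
    timelines: Dict[str, List[Tuple[float, str]]] = defaultdict(list)
    for entity, ts, kind in sorted(events, key=lambda e: e[1]):
        timelines[entity].append((ts, kind))
    return dict(timelines)


def matches_sequence(timeline: Sequence[Tuple[float, str]],
                     stages: Sequence[str],
                     window_s: float) -> bool:
    """True if all stages occur in order within window_s of the first stage."""
    if not stages:
        return False
    for i, (start_ts, kind) in enumerate(timeline):
        if kind != stages[0]:
            continue
        next_stage = 1
        for ts, later_kind in timeline[i + 1:]:
            if ts - start_ts > window_s:
                break  # timeline is sorted, so nothing later can fit
            if next_stage < len(stages) and later_kind == stages[next_stage]:
                next_stage += 1
        if next_stage == len(stages):
            return True
    return False


def flag_entities(events: Iterable[Event],
                  stages: Sequence[str],
                  window_s: float) -> List[str]:
    """Return the entities whose timeline contains the staged sequence."""
    return sorted(entity for entity, timeline in build_timelines(events).items()
                  if matches_sequence(timeline, stages, window_s))
```

Collapsing events into one per-entity timeline is what lets an analyst see the chain in a single view instead of pivoting across consoles.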

How does Eventus measure risk reduction and prove ROI?

- Baseline & A/B: Compare pre-AI vs post-AI windows; run shadow A/B to isolate automation impact.

- Core risk deltas: Track MTTD, MTTR, dwell time, P0/P1 escapes, recurrence, false-negative rate.

- Quality, not just speed: Measure precision/recall, false-positive rate, auto-closure accuracy, analyst override rate.

- Translate to cost: Hours saved × cases/month × fully loaded rate; add tool consolidation and avoided IR spend.

- Expected loss: ΔEL = Σ[(prob_before − prob_after) × impact]. Include downtime avoided.

- Scale with load: Cases/FTE and events/sec hold steady as the volume of security data grows.

- Significance & SLOs: Use proper stats on deltas; enforce SLOs (e.g., MTTD ≤ 5 min, MTTR ≤ 30 min) and error budgets.

- ROI: (Quantified benefits − Total costs) ÷ Total costs, including platform, compute, storage, integration, model ops.
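The cost, expected-loss, and ROI formulas above translate directly into a few functions. The sketch below is only a worked example: every input figure is hypothetical, and the function names are made up for illustration.

```python
from typing import Iterable, Tuple


def labor_savings(hours_saved_per_case: float, cases_per_month: float,
                  hourly_rate: float, months: int = 12) -> float:
    """Hours saved x cases/month x fully loaded rate, over a period."""
    return hours_saved_per_case * cases_per_month * hourly_rate * months


def expected_loss_delta(scenarios: Iterable[Tuple[float, float, float]]) -> float:
    """Sum of (prob_before - prob_after) * impact over loss scenarios."""
    return sum((before - after) * impact for before, after, impact in scenarios)


def roi(benefits: float, total_costs: float) -> float:
    """(Quantified benefits - total costs) / total costs."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (benefits - total_costs) / total_costs


# Hypothetical annual figures, for illustration only.
savings = labor_savings(hours_saved_per_case=0.5, cases_per_month=400,
                        hourly_rate=80.0)
avoided_loss = expected_loss_delta([
    (0.10, 0.04, 1_000_000),  # (prob_before, prob_after, impact)
    (0.30, 0.20, 200_000),
])
total_costs = 160_000  # platform, compute, storage, integration, model ops
print(f"ROI: {roi(savings + avoided_loss, total_costs):.0%}")
```

With these made-up inputs, labor savings come to 192,000 and avoided expected loss to about 80,000, for an ROI of roughly 70%. Tool consolidation and avoided IR spend would be added to the benefits term in the same way.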

How does Eventus secure the AI itself and its data?

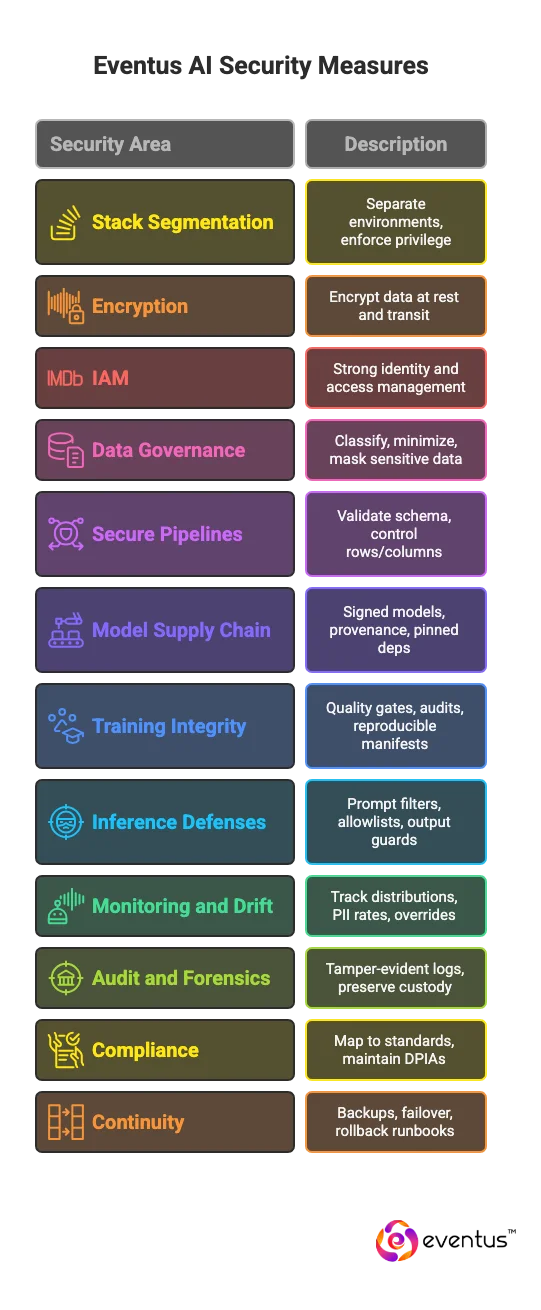

- Segment the stack: Separate training, fine-tuning, inference, storage; enforce least privilege and one-way flows.

- Encrypt by default: KMS/HSM envelope at rest; TLS 1.3 in transit; auto-rotate keys; forbid plaintext export.

- Strong IAM: SSO, MFA, JIT access, RBAC/ABAC, tenant isolation; log and review all privileged actions.

- Data governance: Classify, minimize, mask/tokenize sensitive fields; policy-based retention; opt-out of training on customer prompts.

- Secure pipelines: Schema validation, row/column controls, checksums; only signed datasets and SBOM-tracked artifacts.

- Model supply chain: Signed models/containers, provenance (SLSA), pinned deps, CVE scans; block unsigned weights.

- Training integrity: Quality gates, outlier and label audits, canary and holdouts; reproducible manifests.

- Inference defenses: Prompt-injection filters, tool allowlists, output guards, rate limits, hard kill switches.

- Monitoring and drift: Track input/output distributions, PII/secrets rates, overrides; auto rollback or retrain on thresholds.

- Audit and forensics: Tamper-evident logs of prompts, tool calls, versions, features; preserve chain of custody.

- Compliance: Map to SOC 2, ISO 27001, PCI, HIPAA, GDPR; maintain DPIAs and data maps.

- Continuity: Backups, cross-region failover, model rollback runbooks, token and secret revocation.
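One common way to make logs of prompts and tool calls tamper-evident, as the audit bullet above requires, is a hash chain in which each entry commits to the previous one. The sketch below uses only Python's standard `hashlib` and `json`; the record fields and function names are illustrative assumptions, and it does not claim to reflect how any particular vendor stores audit data.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    """Append a record, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _digest(prev_hash, record)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute the chain; any edited, removed, or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this only detects tampering if the latest hash is also anchored somewhere the writer cannot modify, such as write-once storage or a separate system; truncating the tail of the log is otherwise undetectable.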

What does a maturity roadmap for an AI-enabled SOC look like?

- L1 Assistive: Centralized logs, basic correlation; MTTD > 60m, MTTR > 8h; exit = data SLAs + starter playbooks.

- L2 Orchestrated: SOAR with approvals; 15–30% tasks automated; MTTD 30–60m, MTTR 2–8h; exit = versioning + rollback.

- L3 AI-assisted: Entity stitching, risk scoring, LLMs; 40–60% triage automated; MTTD 5–30m, MTTR 1–3h, precision 50–70%; exit = stable P/R, <10% overrides.

- L4 Targeted autonomous: Pre-approved auto-actions; 60–80% high-volume flows; MTTD ≤ 5m, MTTR 15–60m, P0 escapes < 1%; exit = red-team pass + drift monitoring.

- L5 Predictive/self-optimizing: Exposure-driven detection, self-tuning playbooks; 80%+ repetitive work automated; MTTR < 30m, precision > 80%, cases/FTE 2–3×; exit = SLOs met ≥ 3 quarters.

- Enablers (all levels): High-quality telemetry; strong IAM, encryption, audit; post-incident learning; A/B validation; ongoing analyst upskilling.

What future trends will change SOC automation in the next 24 months?

- Agentic copilots: LLMs execute bounded playbooks with approval; Tier-1 load ↓ 30–50%.

- Exposure-driven detection: CTEM prioritizes high-risk assets; routing times shrink.

- Graph + vector correlation: Cross-tool entity linking cuts pivots and lateral-movement misses.

- Identity-first automation: Session risk scoring, MFA-fatigue catch, JIT revocation with rollback.

- eBPF/Kubernetes telemetry: Precise process/network signals enable safer cloud containment.

- Signed supply chain: SLSA/SBOM-gated models, code, and connectors block tampering.

- Multimodal phishing: Text+image+link modeling raises precision; allowlists keep recall.

- Continuous purple-team: Staged ATT&CK emulation validates detections before promotion.

- Model risk SLOs: Drift/bias/adversarial tests gate automation; auto-pause on confidence drops.

- Policy-aware auto-actions: Playbooks embed SOC 2/ISO/PCI/HIPAA gates with audit trails.

- Cost-optimized inference: MoE + small task models handle 70–80% of routine actions.

- SaaS/OAuth governance: Continuous token hygiene and anomaly scoring, not quarterly checks.
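The "auto-pause on confidence drops" idea from the model risk SLOs bullet above could look something like the following gate. It is a minimal sketch: the class name, the rolling-mean rule, and the default window and threshold are assumptions chosen for illustration, not recommended values.

```python
from collections import deque


class ConfidenceGate:
    """Pause auto-actions when rolling mean model confidence drops too low."""

    def __init__(self, window: int = 50, min_mean: float = 0.8) -> None:
        self.scores = deque(maxlen=window)
        self.min_mean = min_mean
        self.paused = False

    def record(self, confidence: float) -> bool:
        """Record one prediction's confidence; return True if automation may act."""
        self.scores.append(confidence)
        window_full = len(self.scores) == self.scores.maxlen
        if window_full and sum(self.scores) / len(self.scores) < self.min_mean:
            self.paused = True  # stays paused until a human resets the gate
        return not self.paused

    def reset(self) -> None:
        """Human-initiated resume after review; clears the rolling window."""
        self.scores.clear()
        self.paused = False
```

Requiring an explicit `reset()` keeps a person in the loop before automation resumes, which matches the human-oversight theme of the rest of the article.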