This article maps how artificial intelligence powers deepfake technology and AI voice cloning, and why the deepfake threat represents a new wave of deception. It defines deepfakes and voice-cloning tactics, shows how fraudsters use them to escalate financial and reputational risk, and lists the scams most commonly aimed at the public.


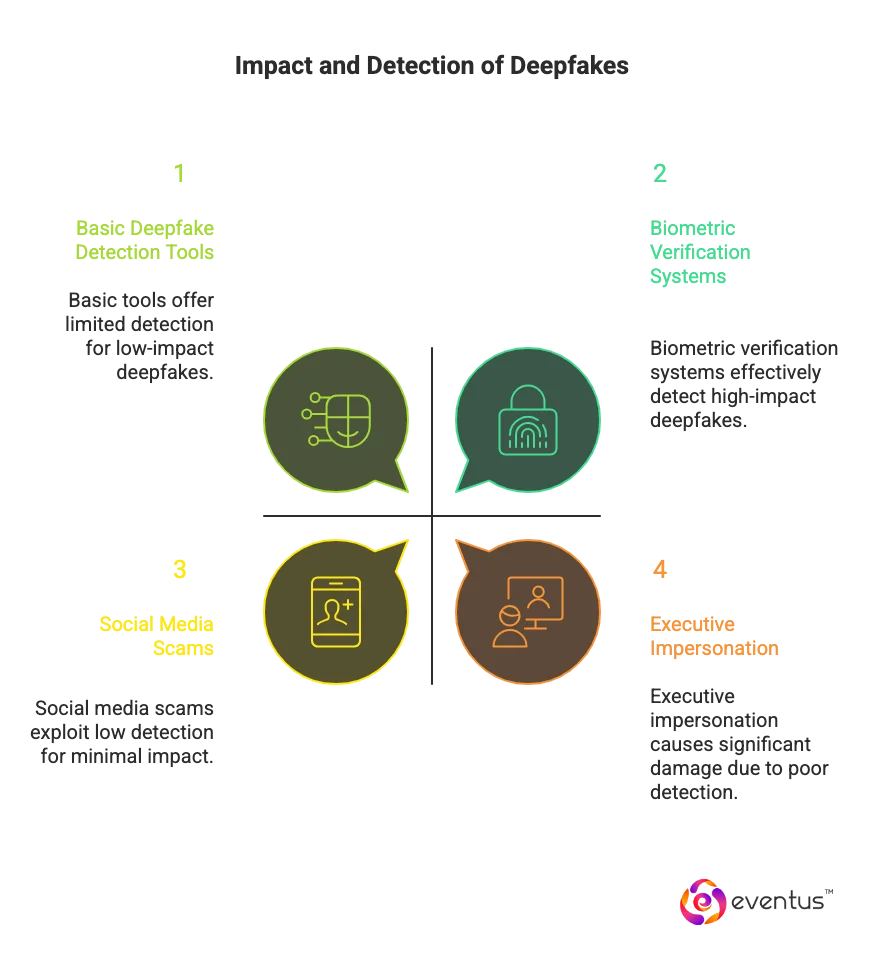

It then outlines “trust but verify” methods and how to spot deepfakes with detection tools. It also covers victim response steps, organizational defenses, and policy moves, highlighting the role of Managed SOC Services in strengthening detection, response, and digital trust. Finally, it explores what authenticated media and AI-powered cybersecurity will require for long-term resilience.

What Are Deepfakes and Voice Clones?

Deepfakes and voice clones are AI-generated synthetic media created with deep learning models such as generative adversarial networks (GANs), autoencoders, and diffusion models. These models are trained on large datasets of audio, images, and video to replicate a person’s likeness or speech with high precision.

In a deepfake, artificial intelligence replaces or manipulates a person’s face, voice, or actions to create convincing fake video or audio that appears authentic. AI voice cloning applies the same process to generate a cloned voice from short voice samples, allowing scammers to impersonate real individuals.

This synthetic media has become a powerful tool for malicious actors who use deepfake technology for fraud, identity theft, and social engineering. Fraudsters use deepfakes to deceive targets during video calls, impersonate executives to lend credibility to business email compromise (BEC) schemes, and exploit emotional trust in romance scams and other financial fraud.

The deepfake threat extends beyond personal loss—it erodes trust, damages reputation, and undermines authentication systems, including biometric verification.

As AI evolves, Eventus AI-Driven SOC and Managed SOC Services leverage deepfake detection and liveness verification to mitigate AI-based deception.

What Differentiates Deepfakes From Traditional Digital Manipulation?

| Aspect | Deepfakes | Traditional Digital Manipulation |
| --- | --- | --- |
| Technology Used | Created with generative AI and deep learning models, such as generative adversarial networks (GANs), that learn to recreate face and voice patterns automatically. | Relies on manual editing tools such as Photoshop or After Effects, requiring human effort and frame-by-frame alterations. |
| Creation Method | Uses AI tools built on GANs, autoencoders, or diffusion models to synthesize realistic deepfake video and audio. | Involves conventional editing of video, images, or sound files without AI-driven synthesis. |
| Output Quality | Produces convincing fakes that mimic tone, emotion, and behavior, often indistinguishable from real footage. | Limited by the editor’s skill; signs of alteration such as mismatched lighting or unnatural transitions are easier to spot. |
| Scalability | Automated AI pipelines can rapidly generate fake accounts, synthetic identities, or thousands of deepfake images. | Labor-intensive, slow to produce, and difficult to scale across many pieces of content. |
| Fraud and Misuse | Used in deepfake scams, phishing, robocalls, and political manipulation by malicious actors seeking financial gain or to cause reputational damage. | Historically used in isolated cases of fraudulent editing or small-scale disinformation with limited impact. |
| Detection Difficulty | Requires dedicated deepfake analysis, including audio deepfake detection and trained detection models, to uncover inconsistencies in pixels and sound frequencies. | Often detectable through visual inspection or traditional forensic tools, since the edits are made by hand rather than generated by AI. |
| Nature of Deception | Reconstructs reality through synthetic media, impersonating voices, simulating live video calls, and deceiving authentication systems. | Alters or enhances existing visuals without creating an entire fake identity or generating new synthetic behavior. |
| Impact on Society | Fuels fraud and cyber deception, erodes trust in digital communication, and threatens the integrity of verification and authentication. | Causes more limited harm, mostly affecting perception rather than eroding systemic trust. |
| Examples of Misuse | The AI-generated Biden robocall ahead of the 2024 New Hampshire primary, AI chatbots imitating real people, cloned audio in impersonation scams, and social engineering over calls or video. | Photo doctoring in tabloids, altered news footage, or edited video for propaganda. |
| Response Measures | Countered by building detection capabilities and developing detection technology that can identify deepfake evidence. | Addressed through manual verification, metadata inspection, and traditional authenticity checks. |

How Can SOC Teams Detect and Defend Against Deepfakes and Voice Clones in the Age of AI Fraud?

As AI-driven SOC-as-a-Service platforms mature, managed SOC providers are strengthening defenses against synthetic-identity attacks such as deepfakes and voice clones. These attacks use AI-generated content to impersonate executives, manipulate communications, and trigger fraudulent transactions. Leading managed security service providers (MSSPs) now combine AI-driven verification with behavioral analysis to protect businesses against these emerging risks.

Detection

- Use deepfake detection tools to uncover manipulation artifacts in both visual and audio data.

- Apply voice biometrics to catch cadence and timbre mismatches in clones.

- Verify provenance via metadata checks and C2PA/CAI signatures.

- Correlate user behavior and device data to identify anomalies linked to synthetic-identity campaigns.

- Ingest threat intel on synthetic-identity campaigns to enrich alerts.
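To make the detection steps above concrete, here is a minimal Python sketch of how a SOC playbook might fold several of them into a single risk score. It assumes a speaker-embedding model (not shown) has already converted the caller’s audio and the enrolled voiceprint into numeric vectors. The `CallSignals` fields, the weights, the 0.80 voice-match threshold, and the alert threshold of 50 are all hypothetical values chosen for illustration, not calibrated figures from any product.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds: real systems calibrate these per model and per user.
VOICE_MATCH_THRESHOLD = 0.80
ALERT_THRESHOLD = 50


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class CallSignals:
    call_embedding: list[float]      # voiceprint from the live call (assumed model output)
    enrolled_embedding: list[float]  # voiceprint enrolled for the claimed speaker (assumed)
    provenance_verified: bool        # e.g. result of a metadata or C2PA check
    new_device: bool                 # request came from a device not seen before
    off_hours: bool                  # request arrived outside the user's usual hours


def score_call(s: CallSignals) -> tuple[int, list[str]]:
    """Add hypothetical weights for each suspicious signal and explain why."""
    score = 0
    reasons: list[str] = []
    similarity = cosine_similarity(s.call_embedding, s.enrolled_embedding)
    if similarity < VOICE_MATCH_THRESHOLD:
        score += 40
        reasons.append(f"voice mismatch (similarity {similarity:.2f})")
    if not s.provenance_verified:
        score += 25
        reasons.append("no verifiable provenance")
    if s.new_device:
        score += 20
        reasons.append("unrecognized device")
    if s.off_hours:
        score += 15
        reasons.append("unusual time of request")
    return score, reasons


if __name__ == "__main__":
    call = CallSignals(
        call_embedding=[0.9, 0.1, 0.3],
        enrolled_embedding=[0.2, 0.9, 0.4],
        provenance_verified=False,
        new_device=True,
        off_hours=False,
    )
    score, reasons = score_call(call)
    print(score, "ALERT" if score >= ALERT_THRESHOLD else "ok", reasons)
```

Scoring rather than hard-blocking lets analysts tune weights as attacker techniques shift. In the sample run, the voice mismatch, missing provenance, and unrecognized device add up to 85, well above the hypothetical alert threshold.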

Defense

- Require secondary verification (MFA, callback, signed approvals) for high-risk requests.

- Apply zero trust to voice and video instructions: do not act on them without cryptographic or contextual proof.

- Automate triage through SOAR integration, keeping a human in the loop for final validation.

- Run deepfake-focused awareness drills and simulations for staff.

- Preserve chain-of-custody and audit trails for synthetic-media incidents.
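Two of the defense steps above, secondary verification for high-risk requests and a tamper-evident audit trail, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the action names, the 10,000 amount threshold, and the `Request` and `AuditLog` types are hypothetical, and a production system would keep the log in write-once storage rather than in memory. The key design choice is that a voice or video instruction alone never clears a high-risk request; it needs both a callback to a number already on file and a signed approval, otherwise it is held for a human analyst.

```python
import hashlib
import json
from dataclasses import dataclass

# Hypothetical policy values for illustration only.
HIGH_RISK_ACTIONS = {"wire_transfer", "change_bank_details", "reset_credentials"}
AMOUNT_THRESHOLD = 10_000
GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


@dataclass
class Request:
    requester: str
    action: str
    amount: float
    channel: str                      # e.g. "voice", "video", "email"
    callback_confirmed: bool = False  # confirmed by calling a number already on file
    signed_approval: bool = False     # approved in a signed workflow, not on the call


def decide(req: Request) -> str:
    """Zero-trust gate: the channel a request arrives on is never proof of identity."""
    high_risk = req.action in HIGH_RISK_ACTIONS or req.amount >= AMOUNT_THRESHOLD
    if not high_risk:
        return "allow"
    if req.callback_confirmed and req.signed_approval:
        return "allow"
    return "hold_for_human_review"


class AuditLog:
    """Hash-chained log: altering any earlier entry breaks the chain on verify()."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"event": dict(event), "prev": prev_hash, "hash": self._digest(prev_hash, event)}
        )

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    req = Request("cfo@example.com", "wire_transfer", 250_000, channel="video")
    log.append({"requester": req.requester, "action": req.action, "decision": decide(req)})
    print(log.entries[-1]["event"]["decision"])  # hold_for_human_review
    print(log.verify())                          # True
    log.entries[0]["event"]["decision"] = "allow"  # simulate tampering
    print(log.verify())                          # False
```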

Why are Deepfakes Becoming More Accessible and Widespread?

Here’s why:

- Cheap compute and open models: These lower the barriers to development and use, letting almost anyone create a convincing deepfake.

- Turnkey toolchains: Pretrained pipelines let malicious actors produce deepfake audio and video without expertise.

- Abundant training data: Social platforms supply the audio and video samples needed for voice cloning and deepfake creation.

- Higher-fidelity generators: Each new generation of models produces more realistic output, making deepfakes more convincing and more damaging.

- Easy distribution at scale: Bots, direct messages, and robocalls spread deepfake disinformation quickly and cheaply.

- Weak verification habits: Targets who don’t confirm requests through a separate communication channel are easily duped into transferring funds.

- Remote-first workflows: Constant reliance on video calls widens the pool of targets for deepfake attacks.

- Uneven defenses: Limited deepfake analysis and detection capability leaves gaps that attackers exploit.

What are the Most Common Deepfake Scams Targeting the Public?

Given below are the most common deepfake scams:

- Celebrity or influencer deepfake investment/offer scams: A deepfake video shows a trusted public figure endorsing a “limited-time investment” or “mega deal” to lure victims. Case study: Two Bengaluru residents lost about ₹87 lakh after seeing deepfake videos of N. R. Narayana Murthy and Mukesh Ambani promoting a fraudulent trading platform.

- Voice-cloning / impersonation scams (relatives, bosses, authority figures): A cloned voice of someone you know (a family member, friend, or colleague) asks for urgent money or personal details. Emotional pressure combined with a plausible voice makes the scam effective. Case study: In Kozhikode, a man lost ₹40,000 after a WhatsApp video call in which scammers used an AI deepfake face-swap to impersonate his long-time friend.

- Deepfake-enabled “Shopping / Festive Offer” scams: Around festivals or big sale events, a brand spokesperson or celebrity appears in a deepfake video promoting huge discounts or refunds and linking to a fraudulent site or app. In one analysed case, a video using deepfake voices of Nirmala Sitharaman and journalist Sweta Singh to promote a fake investment project was found to be manipulated with AI-generated audio and video.

- Fake loan apps / extortion via deepfake porn or manipulated content: A user uploads personal ID or photos to a loan app; later, a deepfake video or image is made from them and used for blackmail (“we will share this video if you don’t pay”). Case study: A 79-year-old Bengaluru woman lost nearly ₹35 lakh after scammers used deepfake videos of Narayana Murthy to promote a fake UK trading platform and then attempted extortion.

- Deepfakes in stock / crypto investment and trading scams: Deepfake videos show business celebrities or finance leaders giving stock or crypto tips, and victims deposit funds into fake platforms. Case study: In Dehradun/Noida, a man lost ₹66 lakh after watching a fake video of the Finance Minister and registering on a scam crypto-investment site.

What Should You Do If You’re a Victim of Deepfake or Voice Clone Fraud?

Here’s what to do if you’re a victim of deepfake or voice clone fraud:

| Category | India | United States |
| --- | --- | --- |
| Relevant Laws | IT Act, 2000 (Section 66D: cheating by impersonation using a computer resource; Section 67: obscene content); IPC provisions on defamation and identity fraud (the IPC has since been replaced by the Bharatiya Nyaya Sanhita, 2023); personality rights recognised by Indian courts (Delhi HC rulings) | FTC Act (prohibits deceptive practices); state laws such as Tennessee’s ELVIS Act, which protect likeness and voice; Identity Theft and Assumption Deterrence Act |
| Primary Authority | Local police cyber crime cell / CIU; National Cyber Crime Reporting Portal (cybercrime.gov.in) | Federal Trade Commission (FTC); local/state police; FBI Internet Crime Complaint Center (IC3.gov) |
| Immediate Steps | Preserve all evidence (videos, messages, screenshots); report to local police or the cyber cell; file a complaint on cybercrime.gov.in; notify your bank if money is involved; report the deepfake to the platform for takedown | Save all proof of the scam (video/audio files, messages); report to the FTC via ReportFraud.ftc.gov; file a report with local/state police; contact your bank or credit card provider; report to IC3.gov if online fraud is involved |
| Legal Remedies | File an FIR for cheating, defamation, or impersonation; send a cease-and-desist notice through a lawyer or seek a court injunction; request takedown under the IT Rules (Intermediary Guidelines) | File a criminal complaint for identity theft or wire fraud; request removal from platforms citing right of publicity or impersonation; seek damages for reputational harm under state laws |
| Financial Protection | Inform your bank immediately and request a freeze or reversal; track suspicious UPI or wallet transactions | Contact your bank and the credit bureaus; place a fraud alert or credit freeze; monitor credit reports for new accounts |
| Prevention Tips | Verify any “urgent” call using a known number; use code words for family verification; avoid sharing personal media online | Hang up and verify the caller’s identity separately; don’t respond to “urgent” requests over call or text; use strong privacy settings on social media |

What Does the Future of Trust Look Like in the Age of AI?

Here’s what it looks like:

- The future of trust in the age of AI will depend on verifiable authenticity rather than assumed credibility.

- Digital trust frameworks will evolve to include AI-driven verification systems capable of validating synthetic media, voice clones, and deepfake content in real time.

- Blockchain-backed provenance and watermarking standards will help trace the origin of AI-generated material and distinguish genuine from manipulated data.

- Authentication technologies such as liveness detection, biometric layering, and device-based identity proofs will strengthen validation during communication and transactions.

- Public awareness and digital literacy will become essential, as individuals learn to question and confirm sources instead of relying on visual or auditory cues.

- Organizations will adopt zero-trust principles beyond networks—extending them to people, content, and AI systems to prevent deception and data misuse.

- Over time, trust will shift from the perception of truth to the proof of truth, where verified identity and provenance define credibility in an AI-driven world.
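The provenance idea behind these predictions, binding a piece of media to its publisher so that any later edit is detectable, can be illustrated with a short Python sketch. It is deliberately simplified and is not C2PA: real Content Credentials rely on public-key signatures and certificate chains, whereas this example uses a shared-secret HMAC from the standard library so it stays self-contained. The manifest layout and the `make_manifest` / `verify_manifest` names are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, publisher: str, key: bytes) -> dict:
    """Record the content hash and publisher, then sign that claim with HMAC-SHA256."""
    claim = {"publisher": publisher, "sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the claim is authentic AND the content still matches its recorded hash."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False
    return hashlib.sha256(content).hexdigest() == manifest["claim"]["sha256"]


if __name__ == "__main__":
    key = b"demo-shared-secret"  # hypothetical; never hard-code real keys
    original = b"frame data of the original clip"
    manifest = make_manifest(original, "newsroom.example", key)
    print(verify_manifest(original, manifest, key))              # True
    print(verify_manifest(original + b"edited", manifest, key))  # False
```

Because the signature covers the recorded hash, an attacker cannot swap in manipulated media and simply recompute the hash without also holding the signing key.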

Building Trust in the Age of AI Deception

As deepfakes and voice cloning grow more sophisticated, they blur the line between real and synthetic — challenging every layer of digital trust. Organizations can no longer rely on traditional defenses; they need AI-driven SOC capabilities and Managed SOC Services that continuously learn, adapt, and respond to emerging threats.

With Eventus AI-Driven SOC in the Cloud, enterprises gain smarter visibility, faster detection, and stronger resilience against AI-powered deception. From proactive threat intelligence to automated response orchestration, Eventus empowers your security team to stay ahead of cybercriminals — no matter how convincing the fraud becomes.

Because in the age of AI, trust isn’t given — it’s verified. And Eventus is your trusted partner in securing that future.

Ready to outsmart AI-driven fraud?

Discover how Eventus AI-Driven Managed SOC Services can protect your business from deepfakes, voice clones, and next-generation cyber threats.

FAQs

Q1. Can deepfake detection tools guarantee 100% accuracy?

Ans: No, detection tools reduce risk but cannot guarantee complete accuracy against evolving AI models.

Q2. Are there laws specifically against deepfake creation?

Ans: Some countries like the U.S. and India are drafting or updating laws to criminalize malicious deepfake usage.

Q3. How can organizations train employees to recognize deepfakes?

Ans: Regular awareness programs and simulated attack exercises help employees identify fake voices or videos.

Q4. Do watermarking and blockchain help combat deepfakes?

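Ans: Partly. Provenance records and watermarks help establish authenticity by tracing a file’s origin and making tampering detectable, but they cannot prevent tampering outright, and watermarks can sometimes be removed.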

Q5. What industries are most at risk from voice cloning fraud?

Ans: Finance, government, and corporate sectors face the highest risk due to frequent identity-based communications.